Tutorial: connecting the full-size DeepSeek R1 and the 70B distilled version to webman-ai

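webman-ai model overview

Under webman-ai's conventions, adding a model only requires creating a new model handler in the plugin/ai/app/handler directory. The application has a commonly used method, AiModel::init();, which automatically inserts a database record for the new AI model.

Create the DeepSeek handler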

<?php

namespace plugin\ai\app\handler;

class DeepSeek extends Base
{
    /**
     * @var string Name of the model handler
     */
    protected static $name = 'DeepSeek';

    /**
     * @var string Model type
     */
    protected static $type = 'deepseek';

    /**
     * @var string[] Supported model names
     */
    public static $models = [
        'deepseek-chat',
        'deepseek-reasoner',
    ];

    /**
     * @var array Custom settings
     */
    public static $defaultSettings = [
        'api' => [
            'name' => 'API',
            'type' => 'text',
            'value' => 'https://api.deepseek.com',
            'desc' => 'API 地址', // label: "API address"
        ],
        'apikey' => [
            'name' => 'ApiKey',
            'type' => 'text',
            'value' => '',
        ],
        'regFreeCount' => [
            'name' => '注册赠送', // label: "granted on registration"
            'type' => 'number',
            'value' => 0,
        ],
        'dayFreeCount' => [
            'name' => '每日赠送', // label: "granted daily"
            'type' => 'number',
            'value' => 0,
        ],
    ];

    /**
     * @var string Driver class
     */
    protected $driverClass = driver\DeepSeek::class;

    protected static bool $balanceVisible = false;

    /**
     * Chat completion
     * @param $data
     * @param $options
     * @return void
     */
    public function completions($data, $options)
    {
        $this->driver = new $this->driverClass($this->getSettings());
        $this->driver->completions($data, $options);
    }
}

Create the DeepSeek driver

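Path: plugin/ai/app/handler/driver/DeepSeek.php

<?php

namespace plugin\ai\app\handler\driver;

use Throwable;
use Workerman\Http\Client;
use Workerman\Http\Response;

class DeepSeek extends Gpt
{
    /**
     * @var string API base URL
     */
    protected $api = 'https://api.deepseek.com';

    /**
     * @var bool Whether the opening <think> tag has been emitted for this stream
     */
    protected bool $is_reasoning_start = false;

    /**
     * @var bool Whether the closing </think> tag has been emitted for this stream
     */
    protected bool $is_reasoning_end = false;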

    public function completions(array $data, array $options)
    {
        $data = $this->formatData($data);
        if (isset($options['complete'])) {
            // Wrap the caller's callback so it receives either the tool call or the message text
            $options['complete'] = function ($result, Response $response, $apiKey = '') use ($data, $options) {
                if (isset($result['error'])) {
                    return $options['complete']($result, $response, $this->apikey);
                }
                if (!empty($result['choices'][0]['message']['tool_calls'][0])) {
                    $options['complete']($result['choices'][0]['message']['tool_calls'][0], $response, $this->apikey);
                } else {
                    $options['complete']($result['choices'][0]['message']['content'] ?? '', $response, $this->apikey);
                }
            };
        }
        if (isset($options['stream'])) {
            // Streaming response: merge reasoning_content into content, wrapped in <think>...</think>
            $options['stream'] = function ($data) use ($options) {
                $data = array_merge(['content' => '', 'reasoning_content' => ''], $data);
                $content = $data['choices'][0]['delta']['content'] ?? '';
                $reasoning_content = $data['choices'][0]['delta']['reasoning_content'] ?? '';
                if (!$content && $reasoning_content) {
                    if (!$this->is_reasoning_start) {
                        // First reasoning chunk: open the <think> block unless the API already did
                        $this->is_reasoning_start = true;
                        $reasoning_content = strpos($reasoning_content, '<think>') === false ? '<think>' . $reasoning_content : $reasoning_content;
                    }
                    $content = $reasoning_content;
                }
                if ($content && !$reasoning_content && $this->is_reasoning_start && !$this->is_reasoning_end) {
                    // First answer chunk after the reasoning: close the <think> block
                    $this->is_reasoning_end = true;
                    $reasoning_content = '</think>';
                    // Prepend the closing tag and keep the answer chunk itself
                    $content = "\n\n" . $reasoning_content . $content;
                }
                unset($data['model']);
                $data['content'] = $content;
                $data['reasoning_content'] = $reasoning_content;
                $options['stream']($data);
            };
        }
        $headers = $this->getHeaders($options);
        if (isset($options['stream'])) {
            $data['stream'] = true;
        }
        $stream = !empty($data['stream']) && isset($options['stream']);
        $options = $this->formatOptions($options);
        $requestOptions = [
            'method' => 'POST',
            'data' => json_encode($data, JSON_UNESCAPED_UNICODE),
            'headers' => $headers,
            'progress' => function ($buffer) use ($options) {
                // Buffer incoming bytes until the data ends on a line break
                static $tmp = '';
                $tmp .= $buffer;
                if ($tmp === '' || $tmp[strlen($tmp) - 1] !== "\n") {
                    return null;
                }
                // Extract the JSON payload of every "data: {...}" line
                preg_match_all('/data: *?(\{.+?\})[ \r]*?\n/', $tmp, $matches);
                $tmp = '';
                foreach ($matches[1] ?: [] as $match) {
                    if ($json = json_decode($match, true)) {
                        $options['stream']($json);
                    }
                }
            },
            'success' => function (Response $response) use ($options) {
                $result = static::formatResponse((string)$response->getBody());
                $options['complete']($result, $response, $this->apikey);
            },
            'error' => function ($exception) use ($options) {
                $options['complete']([
                    'error' => [
                        'code' => 'exception',
                        'message' => $exception->getMessage(),
                        'detail' => (string)$exception
                    ],
                ], new Response(0), $this->apikey);
            }
        ];
        if (!$stream) {
            unset($requestOptions['progress']);
        }
        $model = $data['model'] ?? '';
        $url = $this->api;
        if (!$path = parse_url($this->api, PHP_URL_PATH)) {
            // No path in the configured address: append the default endpoint
            $url = $this->api . ($this->isAzure ? "/openai/deployments/$model/chat/completions?api-version=$this->azureApiVersion" : "/v1/chat/completions");
        } elseif ($path[strlen($path) - 1] === '/') {
            $url = $this->api . 'chat/completions';
        }
        $http = new Client(['timeout' => 600]);
        $http->request($url, $requestOptions);
    }

    protected function formatData($data): array
    {
        $data = parent::formatData($data);
        foreach ($data['messages'] as $key => $message) {
            // Remove the preset prompt: drop any assistant message among the first two entries
            if ($key <= 1 && $message['role'] === 'assistant') {
                unset($data['messages'][$key]);
            }
        }
        $data['messages'] = array_values($data['messages']);
        return $data;
    }

    public static function formatResponse($buffer)
    {
        if (!$buffer) {
            return [
                'error' => [
                    'code' => 'parse_error',
                    'message' => 'Unable to parse response',
                    'detail' => $buffer
                ]
            ];
        }
        // A body that is a single JSON document is returned as-is
        $json = json_decode($buffer, true);
        if ($json) {
            return $json;
        }
        // Otherwise treat the buffer as "data: {...}" lines and merge the deltas
        $chunks = explode("\n", $buffer);
        $content = '';
        $reasoning_content = '';
        $finishReason = null;
        $model = '';
        $promptFilterResults = null;
        $contentFilterResults = null;
        $contentFilterOffsets = null;
        $toolCalls = [];
        foreach ($chunks as $chunk) {
            $chunk = trim($chunk);
            if ($chunk === "") {
                continue;
            }
            // Strip the "data:" or "data: " prefix
            if (strpos($chunk, 'data:{') === 0) {
                $chunk = substr($chunk, 5);
            } else {
                $chunk = substr($chunk, 6);
            }
            if ($chunk === "" || $chunk === "[DONE]") {
                continue;
            }
            try {
                $data = json_decode($chunk, true);
                if (isset($data['model'])) {
                    $model = $data['model'];
                }
                if (isset($data['prompt_filter_results'])) {
                    $promptFilterResults = $data['prompt_filter_results'];
                }
                if (isset($data['error'])) {
                    $content .= $data['error']['message'] ?? "";
                    $reasoning_content .= $data['error']['message'] ?? "";
                } else {
                    $choices = $data['choices'] ?? [];
                    foreach ($choices as $index => $item) {
                        $delta_content = $item['delta']['content'] ?? '';
                        $delta_reasoning_content = $item['delta']['reasoning_content'] ?? '';
                        if (!$delta_content && $delta_reasoning_content) {
                            // Open the <think> block once, on the first reasoning delta
                            if ($reasoning_content === '' && strpos($delta_reasoning_content, '<think>') === false) {
                                $delta_reasoning_content = '<think>' . $delta_reasoning_content;
                            }
                        } elseif ($delta_content && $reasoning_content !== '' && strpos($reasoning_content, '</think>') === false) {
                            // First answer delta after the reasoning: close the <think> block
                            $reasoning_content .= '</think>';
                        }
                        $content .= $delta_content;
                        $reasoning_content .= $delta_reasoning_content;
                        foreach ($item['delta']['tool_calls'] ?? [] as $toolIndex => $function) {
                            $key = $function['index'] ?? $toolIndex;
                            if (!empty($function['function']['name'])) {
                                $toolCalls[$key] = $function;
                            } elseif (!empty($function['function']['arguments'])) {
                                $toolCalls[$key]['function']['arguments'] .= $function['function']['arguments'];
                            }
                        }
                        if (isset($item['finish_reason'])) {
                            $finishReason = $item['finish_reason'];
                        }
                        if (isset($item['content_filter_results'])) {
                            $contentFilterResults = $item['content_filter_results'];
                        }
                        if (isset($item['content_filter_offsets'])) {
                            $contentFilterOffsets = $item['content_filter_offsets'];
                        }
                    }
                }
            } catch (Throwable $e) {
                echo $e;
            }
        }
        $result = [
            'choices' => [
                [
                    'finish_reason' => $finishReason,
                    'index' => 0,
                    'message' => [
                        'role' => 'assistant',
                        'content' => $content,
                        'reasoning_content' => $reasoning_content,
                    ],
                ]
            ],
            'model' => $model,
        ];
        if ($promptFilterResults) {
            $result['prompt_filter_results'] = $promptFilterResults;
        }
        if ($contentFilterResults) {
            $result['choices'][0]['content_filter_results'] = $contentFilterResults;
        }
        if ($contentFilterOffsets) {
            $result['choices'][0]['content_filter_offsets'] = $contentFilterOffsets;
        }
        if ($toolCalls) {
            $result['choices'][0]['message']['tool_calls'] = array_values($toolCalls);
        }
        return $result;
    }
}

The part to focus on is the handling of reasoning_content. Some model APIs return the reasoning as a <think>...</think> pair, but most return it in a separate reasoning_content field (the full-size model's API, for example).
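To make the two response shapes concrete, here is a minimal sketch in JavaScript (the language of app.js) that brings a complete, non-stream assistant message into one string. The function name toThinkFormat is hypothetical and not part of webman-ai, and the sketch assumes that APIs of the first kind put the <think>...</think> pair inline in the content field.

```javascript
// Hypothetical helper, not part of webman-ai: merges an assistant message
// into a single "<think>...</think>" + answer string.
function toThinkFormat(message) {
  const content = message.content || '';
  const reasoning = message.reasoning_content || '';
  // Shape 1 (assumed): the reasoning is already inline in content.
  if (content.includes('<think>')) {
    return content;
  }
  // Shape 2: the reasoning arrives in a separate field, so wrap it here.
  if (reasoning) {
    return '<think>' + reasoning + '</think>\n\n' + content;
  }
  // No reasoning at all, for example a plain chat model.
  return content;
}

// Made-up sample messages, one per shape.
console.log(toThinkFormat({ content: '4', reasoning_content: '2 + 2 is 4' }));
console.log(toThinkFormat({ content: '<think>2 + 2 is 4</think>\n\n4' }));
```

Either way the caller ends up with the same format, so the front end only has to deal with one case.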

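I handle both stream and non-stream modes here. The approach is the same in both: wrap the thinking-process tokens in <think> and </think> so that they form one standard thinking format.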
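The stream case boils down to a small state machine with two flags. The sketch below restates that idea in JavaScript so it can be run in isolation; the class name ThinkStream and its push method are hypothetical, and the chunks are assumed to be already-parsed OpenAI-style objects with a choices[0].delta field.

```javascript
// Hypothetical sketch of the streaming normalization; these names do not
// exist in webman-ai.
class ThinkStream {
  constructor() {
    this.started = false; // "<think>" has been emitted
    this.ended = false;   // "</think>" has been emitted
  }

  // Takes one parsed chunk and returns the text to display for it.
  push(chunk) {
    const delta = (chunk.choices && chunk.choices[0] && chunk.choices[0].delta) || {};
    const content = delta.content || '';
    const reasoning = delta.reasoning_content || '';
    if (reasoning && !content) {
      if (!this.started) {
        // First reasoning chunk: open the block unless the API already did.
        this.started = true;
        return reasoning.includes('<think>') ? reasoning : '<think>' + reasoning;
      }
      return reasoning;
    }
    if (content && this.started && !this.ended) {
      // First answer chunk after the reasoning: close the block.
      this.ended = true;
      return '\n\n</think>' + content;
    }
    return content;
  }
}

// Made-up chunk sequence: two reasoning deltas, then two answer deltas.
const demoStream = new ThinkStream();
const demoChunks = [
  { choices: [{ delta: { reasoning_content: 'Let me add. ' } }] },
  { choices: [{ delta: { reasoning_content: '2 + 2 = 4.' } }] },
  { choices: [{ delta: { content: '\n\n' } }] },
  { choices: [{ delta: { content: 'The answer is 4.' } }] },
];
console.log(demoChunks.map((c) => demoStream.push(c)).join(''));
```

One instance should be used per response, since the flags are never reset.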

Finally, I adjusted webman-ai's core JS to match this thinking format, which is covered below.

webman-ai role overview

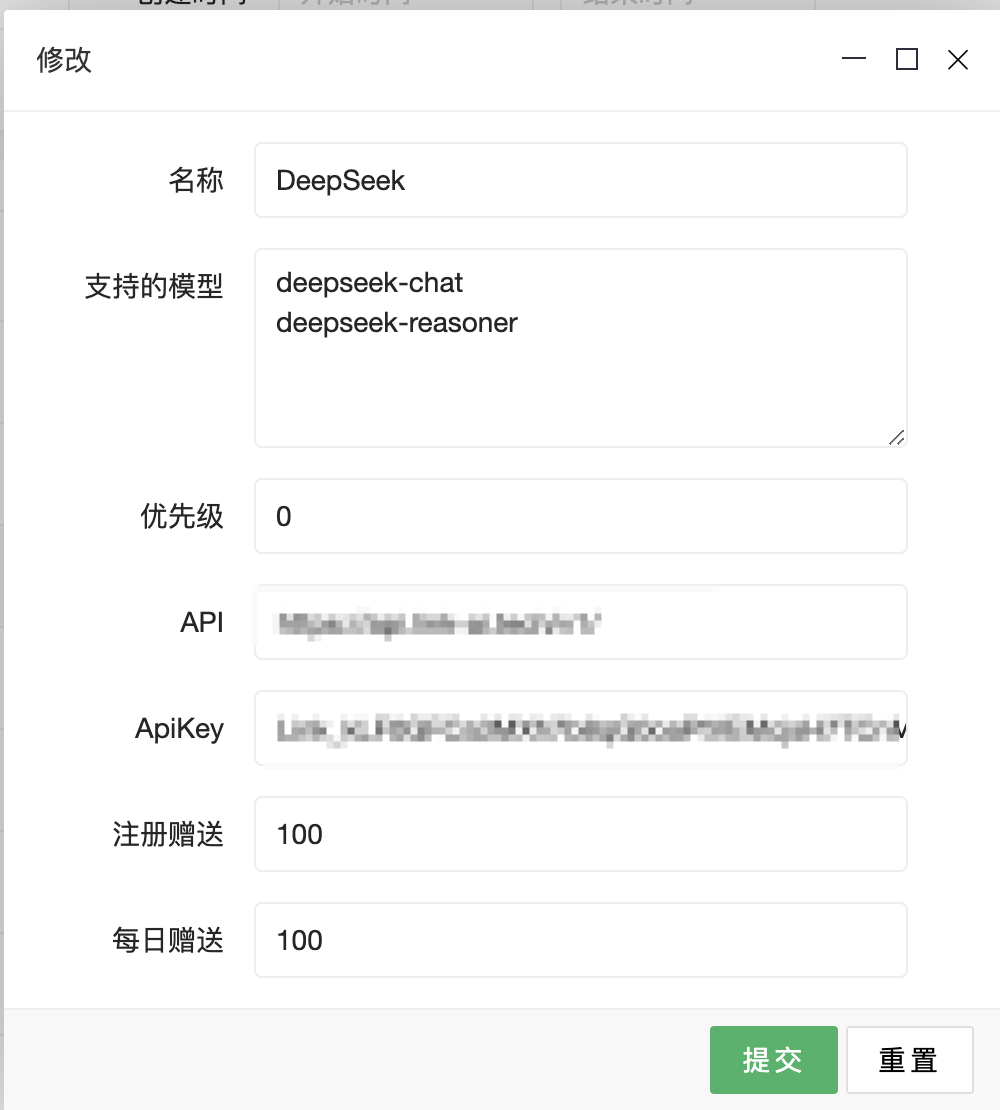

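With the model in place, we can create a new AI role: create the role, select the model, and that's it. One note on where the model list comes from, though:

Check the AI general settings; if the model is not listed there, add it.

AI general settings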

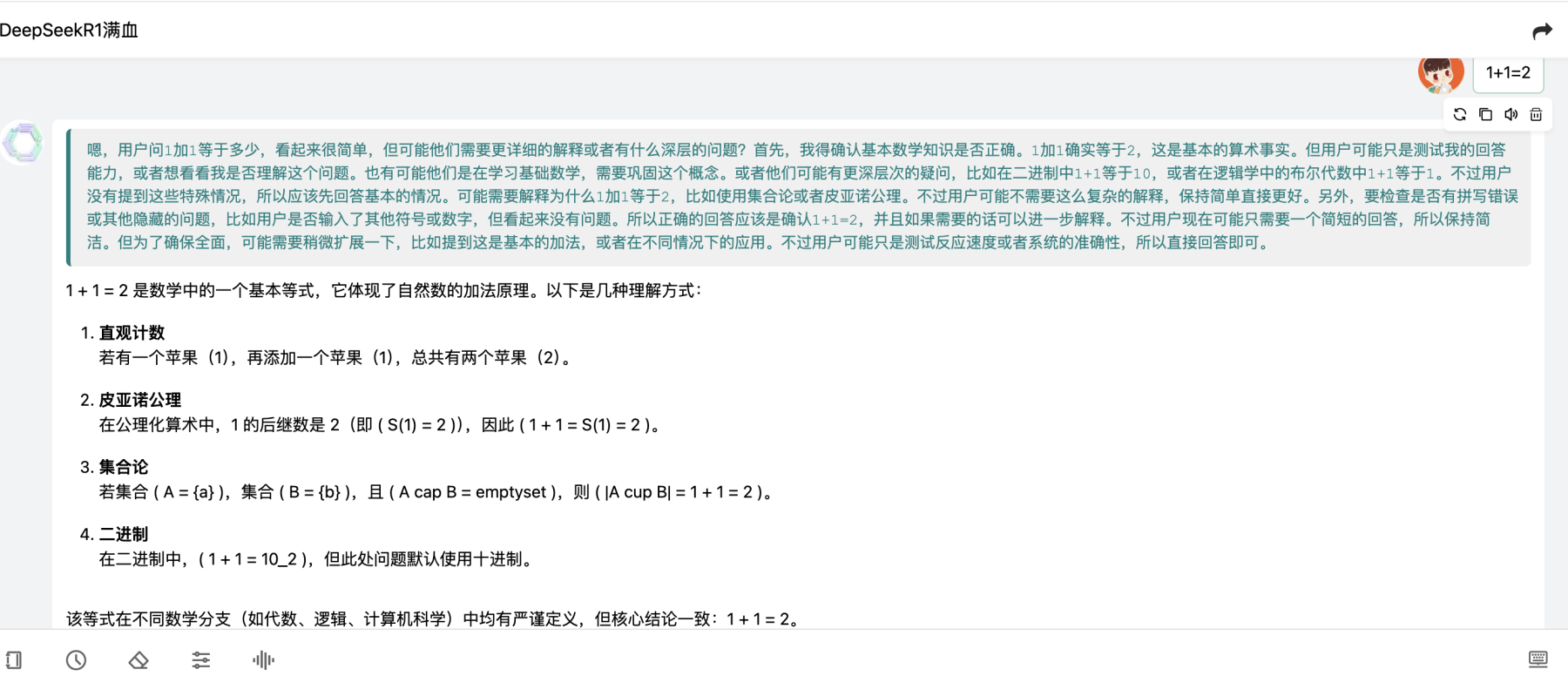

Adjusting webman-ai's app.js

This step is optional; you can also handle it yourself on the back end.

The markdown method: convert the think format into a div

markdown(content) {
    // Use strings as-is, convert numbers to strings, and serialize anything else as JSON
    content = typeof content === "string" ? content
        : typeof content === "number" ? String(content)
        : JSON.stringify(content) || '';
    if (this.chat.model.includes('deepseek')) {
        if (content.includes('<think>')) {
            content = content.replace(/<think>/g, '<div class="deepseek-think">');
            content = content.replace(/<\/think>/g, '</div>');
        }
    }
    // Render the Markdown content and return the resulting HTML
    return this.md.render(content || '');
},

.deepseek-think {
    font-family: 'Courier New', monospace;
    color: #008080;
    background-color: #f0f0f0;
    padding: 10px;
    margin: 10px 0;
    border-radius: 5px;
    /* Vertical bar on the left */
    border-left: 4px solid #008080;
    padding-left: 15px;
    /* Font size */
    font-size: 14px;
    line-height: 1.5;
}

Result

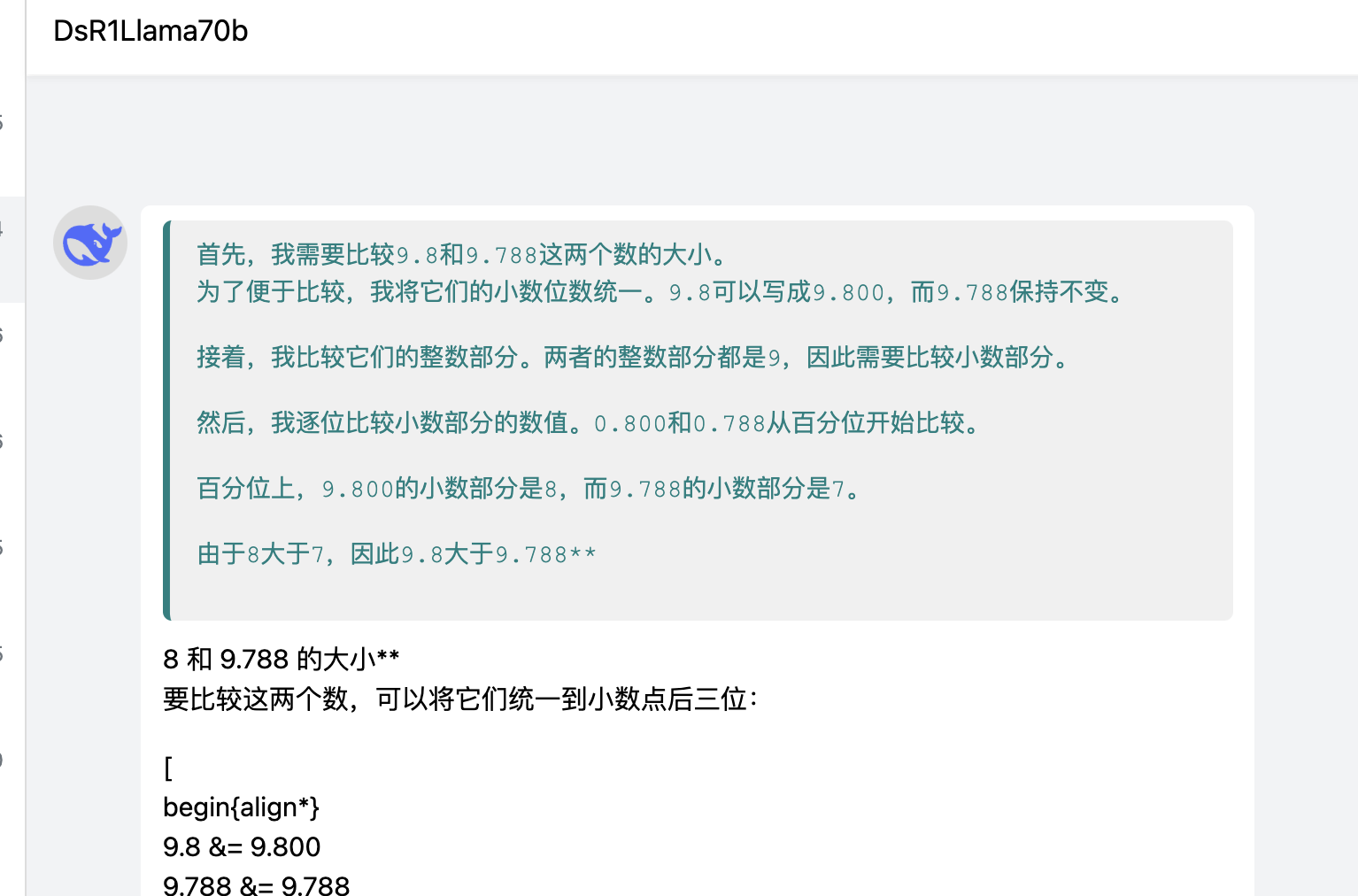
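The tag replacement inside the markdown method can also be tried on its own. The sketch below is a hypothetical standalone function (thinkToDiv is not a webman-ai name) that performs the same replacement and, as an extra assumption about streaming, closes the div when the </think> tag has not arrived yet, so a half-received answer still yields balanced HTML.

```javascript
// Hypothetical standalone version of the <think> to <div> replacement.
function thinkToDiv(content) {
  const text = String(content);
  // Count the tags in the source text before replacing them.
  const opened = (text.match(/<think>/g) || []).length;
  const closed = (text.match(/<\/think>/g) || []).length;
  const html = text
    .replace(/<think>/g, '<div class="deepseek-think">')
    .replace(/<\/think>/g, '</div>');
  // Mid-stream the closing tag may still be missing, so balance the div.
  return html + '</div>'.repeat(Math.max(0, opened - closed));
}

console.log(thinkToDiv('<think>2 + 2 = 4</think>\n\nThe answer is 4.'));
console.log(thinkToDiv('<think>still thinking'));
```

If this.md is a markdown-it instance, which is an assumption here, raw HTML such as this div is only passed through when the renderer was created with the html option enabled.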

Testing and verification

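From what I have observed, the end of the thinking phase is marked by a chunk whose content is \n\n and in which reasoning_content is either absent or an empty string. If you run into problems, you can work from this observation, and feel free to post your results in the comments.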
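To check this observation against another provider, it helps to label each streamed chunk by the field that carries text and to locate the first answer chunk. The following is a rough diagnostic sketch; classifyChunk and findThinkingEnd are hypothetical names, and the sample chunks are made up to mirror the behaviour described above rather than captured from a real API.

```javascript
// Hypothetical diagnostic helpers, not part of webman-ai.
// Labels a parsed chunk as 'reasoning', 'content' or 'empty'.
function classifyChunk(chunk) {
  const delta = (chunk.choices && chunk.choices[0] && chunk.choices[0].delta) || {};
  const hasReasoning = typeof delta.reasoning_content === 'string' && delta.reasoning_content !== '';
  const hasContent = typeof delta.content === 'string' && delta.content !== '';
  if (hasReasoning) return 'reasoning';
  if (hasContent) return 'content';
  return 'empty';
}

// Returns the index of the first content chunk that follows a reasoning
// chunk, or -1 when the stream never switches from reasoning to content.
function findThinkingEnd(chunks) {
  let sawReasoning = false;
  for (let i = 0; i < chunks.length; i++) {
    const kind = classifyChunk(chunks[i]);
    if (kind === 'reasoning') {
      sawReasoning = true;
    } else if (kind === 'content' && sawReasoning) {
      return i;
    }
  }
  return -1;
}

// Made-up sample that follows the observation: the boundary chunk carries "\n\n".
const sampleChunks = [
  { choices: [{ delta: { reasoning_content: 'thinking...' } }] },
  { choices: [{ delta: { content: '\n\n', reasoning_content: '' } }] },
  { choices: [{ delta: { content: 'answer' } }] },
];
const boundary = findThinkingEnd(sampleChunks);
console.log(boundary, JSON.stringify(sampleChunks[boundary].choices[0].delta.content));
```

If the boundary chunk of your provider carries something other than \n\n, printing it this way shows what to adapt.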


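APIs offering the distilled version: Alibaba Cloud, among others

APIs offering the full-size version: Alibaba Cloud, SiliconFlow, Volcano Engine, Baidu Cloud, LinkAI, aizex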